The robots are not taking over

First published in spiked, December 2014

Stephen Hawking may be scared, but AI promises to help, not hinder us.

Do you know the most dangerous threat facing mankind? Is it climate change? Ebola? ISIS? Or, to follow President Obama, is the threat on a par with Ebola and ISIS and going by the name of Vladimir Putin? After all, a columnist on The Sunday Times recently compounded the distaste by describing the Russian leader as Putinstein – in the process revealing how little she knew of Mary Shelley’s seminal anti-experimentation novel Frankenstein (1818), whose title refers to a doctor inventor, not his sad and vengeful robotic construct.

In fact, the biggest threat facing mankind is one that has in some ways only just been discovered: artificial intelligence (AI). The physicist Stephen Hawking has said that AI could become ‘a real danger’ in the ‘not-too-distant’ future. Hawking added that ‘the risk is that computers develop intelligence and take over. Humans, who are limited by slow biological evolution, couldn’t compete, and would be superseded.’

That Hawking himself is aided by computers in his speech is an irony. For the fact is that computers are likely to remain a help much more than a hindrance for many years to come. Sure, they might run amok; but that will be to do with bad or malevolent programming, not the arrival of true intelligence.

Like the IT boosters of Silicon Valley, Hawking invokes Moore’s Law. Yet it is one thing to note the increase in computer power over the years; it is quite another to confer on semiconductors the power to think – and, in particular, the power to think in a social way, using the insights of others and the prevailing zeitgeist to form novel and creative conclusions. Computers are not ‘smart’, any more than cities are. They cannot form aesthetic, ethical, philosophical or political judgments, and never will. These are the special faculties of humankind, and no amount of electrons – or ‘digital democracy’, for that matter – can substitute for them.

In fact, the fear of AI is really a form of hatred for mankind. It marks a new level of estrangement from the things we make, and a rising note of nausea around innovation. That is why professor Nick Bostrom, director of the University of Oxford’s Future of Humanity Institute (which is distinct, please note, from Cambridge’s Centre for the Study of Existential Risk), says that it’s ‘non-obvious’ that more innovation would be better. Well, maybe; but it recently took me about 90 minutes to go by rail from London Paddington to Bostrom’s Oxford, so a bit more innovation in the form of high-speed trains would not go amiss in Britain today.

Hawking and Bostrom are not isolated individuals. In the middle of all today’s crazed euphoria about IT, complete with the taxi application Uber being valued at $41 billion, there is a profound and bilious feeling of apprehension about where IT might lead. Thus, when nothing like AI exists after decades of research in the field, a leading article in the Financial Times intones that pioneers in the field ‘must tread carefully’.

Yes, IBM’s Watson supercomputer recently won the gameshow Jeopardy! But did it know it won? No – the viewers did, but the Giant Calculator did not. Just because a machine passes the Turing test, in that it looks intelligent, doesn’t mean that it is intelligent.

Readers of spiked will understand that. But my smartphone, into which I have dictated this, won’t.


Innovators I like

Robert Furchgott – discovered that nitric oxide transmits signals within the human body

Barry Marshall – showed that the bacterium Helicobacter pylori is the cause of most peptic ulcers, reversing decades of medical doctrine holding that ulcers were caused by stress, spicy foods, and too much acid

N Joseph Woodland – co-inventor of the barcode

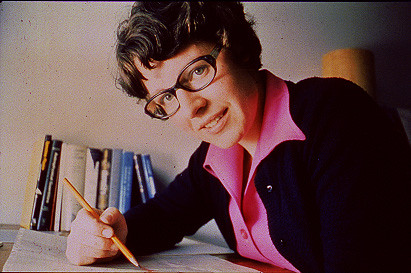

Jocelyn Bell Burnell – she discovered the first radio pulsars

John Tyndall – the man who worked out why the sky was blue

Rosalind Franklin co-discovered the structure of DNA, with Crick and Watson

Rosalyn Sussman Yallow – development of radioimmunoassay (RIA), a method of quantifying minute amounts of biological substances in the body

Jonas Salk – discovery and development of the first successful polio vaccine

John Waterlow – discovered that lack of body potassium causes altitude sickness. First experiment: on himself

Werner Forssmann – the first man to insert a catheter into a human heart: his own

Bruce Bayer – scientist with Kodak whose invention of a colour filter array enabled digital imaging sensors to capture colour

Yuri Gagarin – first man in space. My piece of fandom: http://www.spiked-online.com/newsite/article/10421

Sir Godfrey Hounsfield – inventor, with Robert Ledley, of the CAT scanner

Martin Cooper – inventor of the mobile phone

George Devol – 'father of robotics’ who helped to revolutionise carmaking

Thomas Tuohy – Windscale manager who doused the flames of the 1957 fire

Eugene Polley – TV remote controls
